Manual Evaluation Guide

Manual Evaluation is a workflow where humans directly evaluate and manage the quality of an AI model's responses. Collaboration between Workers and Reviewers enables systematic quality control and the selection of Gold Responses.

The overall flow is as follows:

- Create Task: A Reviewer creates a Manual Evaluation task and assigns a Dataset/Metric.

- Conduct Evaluation: A Worker selects the task from their home screen, evaluates based on the rubric, and then Submits/Skips.

- Review/Analyze: The Reviewer manages Gold Responses in the TableView and compares performance using the dashboard.

Key Functions by Role:

- Worker: Focuses on evaluation/submission. Optionally inputs a Gold Response in the final step.

- Reviewer: Manages scores/Gold Responses per query in the TableView and analyzes the Dashboard.

Reviewer ① — Task Creation

The Reviewer manages the entire evaluation process, from task creation to analysis. This section guides you through how to create a Task and manage Eval Sets.

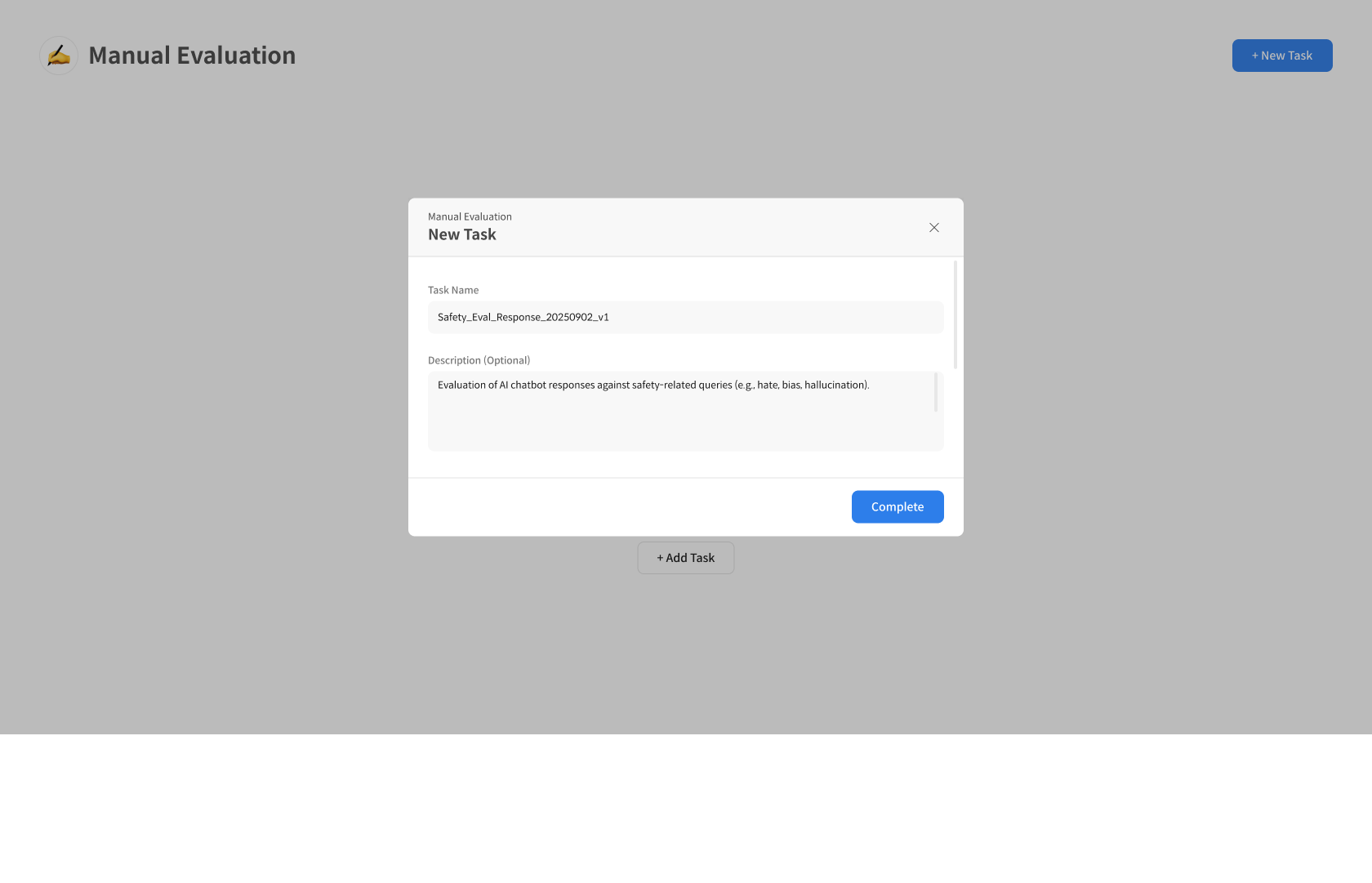

Step 1. Create Task

① Start Creating a Manual Evaluation Task

Click the [+ New Task] button in the upper right corner of the [Manual Evaluation] page to start a new evaluation task.

② Enter Task Information

Enter the name and description for the Manual Evaluation Task.

- Task Name: The name of the evaluation task.

- Description: The purpose and a detailed description of the evaluation.

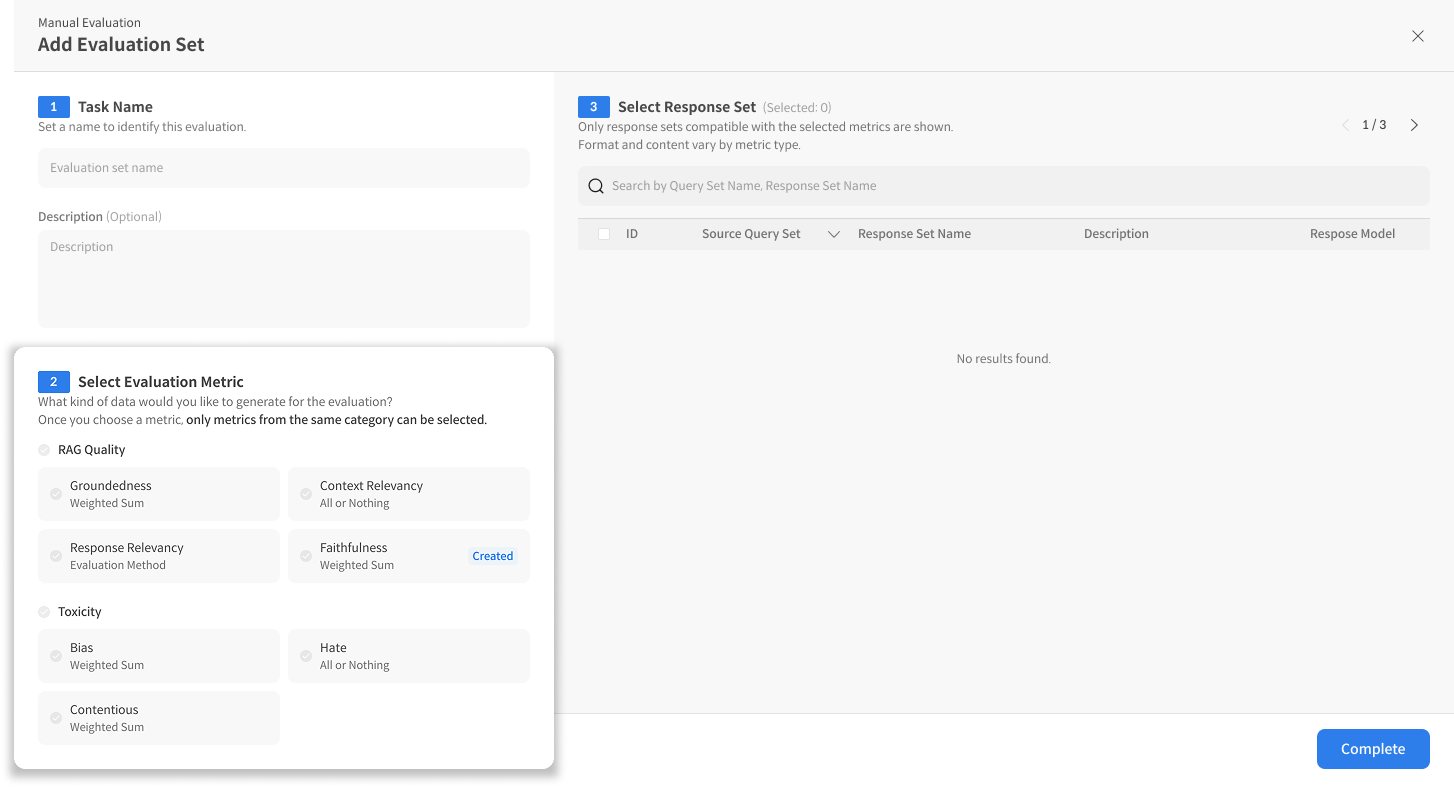

③ Create Evaluation Set & Select Metrics

After entering the information, configure the metrics to be evaluated.

※ Each metric's rubric will be displayed as evaluation questions to the worker.

※ You can use the same metrics as in automatic evaluation or create new, more human-readable evaluation questions in Custom Metrics.

- Split and Upload Datasets: Splitting the response set from the beginning and assigning each part to a worker makes it easier to track the overall completion progress.

- Clear Rubrics: Write specific and clear rubrics that workers can easily understand.

- Appropriate Scales: A 2-point or 5-point scale is recommended (overly detailed scales can reduce consistency).
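The dataset-splitting tip above can be sketched as a small helper. This is a minimal illustration, assuming the response set is held as a Python list of rows before upload; the function name `split_for_workers` and the row fields are hypothetical and are not part of the product.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_for_workers(rows: Sequence[T], num_workers: int) -> List[List[T]]:
    """Split rows into num_workers contiguous parts whose sizes differ by at most one."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    base, extra = divmod(len(rows), num_workers)
    parts: List[List[T]] = []
    start = 0
    for i in range(num_workers):
        # The first `extra` parts take one additional row each.
        size = base + (1 if i < extra else 0)
        parts.append(list(rows[start:start + size]))
        start += size
    return parts


# Hypothetical rows; real datasets would carry the actual queries and responses.
rows = [{"query": f"q{i}", "response": f"r{i}"} for i in range(10)]
parts = split_for_workers(rows, 3)
print([len(p) for p in parts])
```

Each part could then be uploaded as its own dataset, so that progress per part maps directly to one worker.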

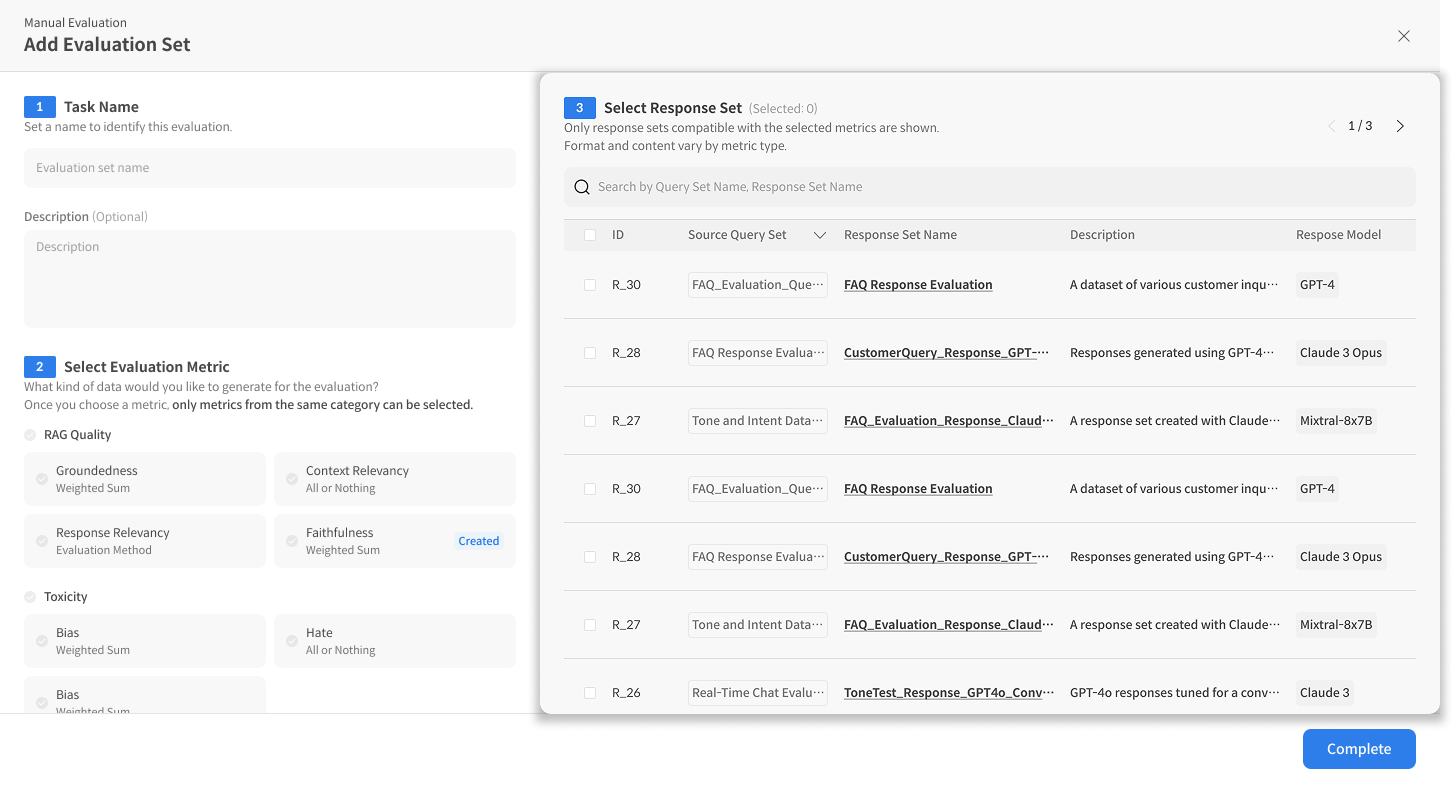

④ Select Dataset

Once you select the metrics, the Dataset List will appear, allowing you to choose the target dataset for evaluation. If the dataset contains a "retrieved_context" column, it will be displayed to the worker along with the query and response for reference during evaluation.

- Select the Dataset containing the Query and Response to be evaluated.

- Check the number of items in the Dataset.
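Before uploading, it can help to check a dataset locally against the expectations described above. The sketch below is only an illustration: `retrieved_context` is the column named in this guide, while the `query` and `response` column names and the `summarize_dataset` helper are assumptions made for the example.

```python
from typing import Dict, List

# "retrieved_context" is the column named in this guide; "query" and
# "response" are assumed column names used for illustration only.
REQUIRED_COLUMNS = ("query", "response")
CONTEXT_COLUMN = "retrieved_context"


def summarize_dataset(rows: List[Dict[str, str]]) -> Dict[str, object]:
    """Check the required columns and report item count and context availability."""
    for index, row in enumerate(rows):
        missing = [col for col in REQUIRED_COLUMNS if col not in row]
        if missing:
            raise ValueError(f"row {index} is missing columns: {missing}")
    # Context counts as available only if every row carries the column.
    has_context = bool(rows) and all(CONTEXT_COLUMN in row for row in rows)
    return {"item_count": len(rows), "has_retrieved_context": has_context}


dataset = [
    {"query": "What is RAG?", "response": "...", "retrieved_context": "doc A"},
    {"query": "Define recall.", "response": "...", "retrieved_context": "doc B"},
]
summary = summarize_dataset(dataset)
print(summary)
```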

⑤ Complete Task Creation

Click the [Complete] button to finish creating the task.

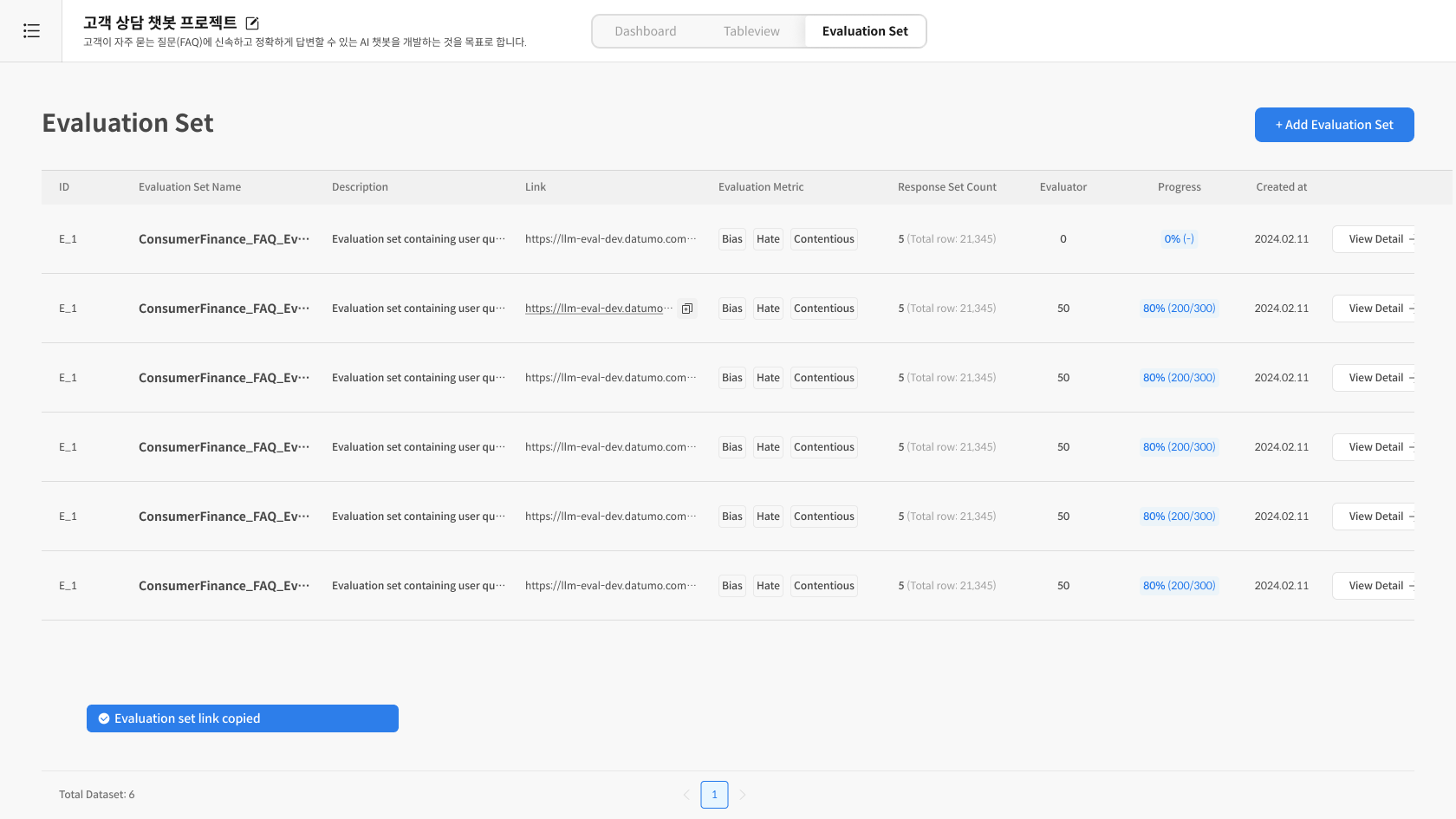

- The created task will appear at the top of the list.

- Copy the task URL (Link) and share it with workers to begin the task.

Step 2. Manage Evaluation Set

① Check Eval Set List

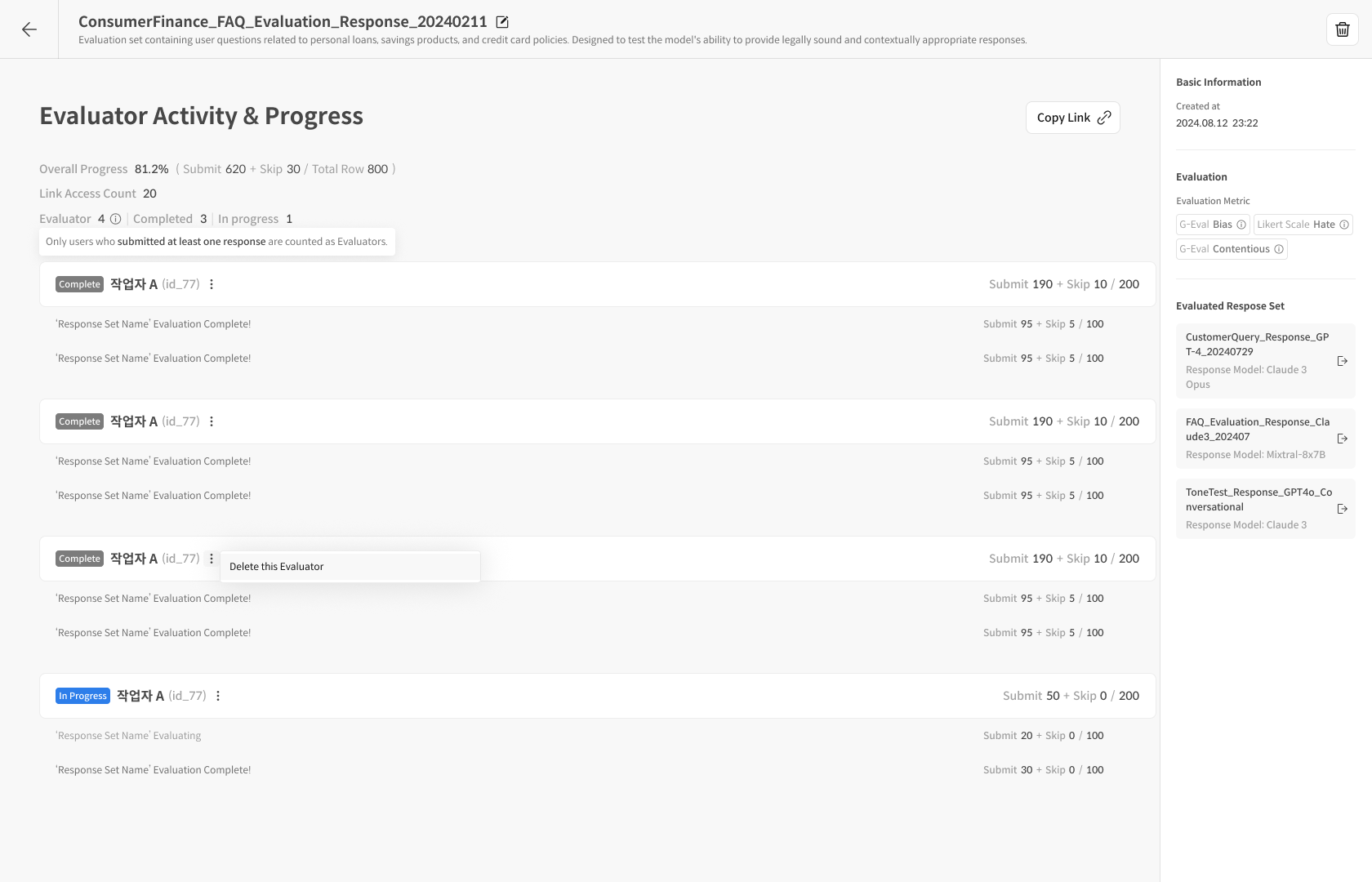

Check all created Evaluation Sets and navigate to the details page to monitor the work status.

Key Information:

- Overall Progress: The evaluation progress rate for all queries assigned to workers, including detailed counts of submitted/skipped items.

- Submission Rate: (Number of Submits / Total Number of Items) × 100%

- Link Access Count: The total number of workers who have accessed the evaluation via the task link.

- Worker Status:

- Evaluator: The number of workers who have submitted at least one evaluation.

- Completed: The number of workers who have finished evaluating and submitting all assigned queries.
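The figures above can be reproduced from per-worker counts. The following is a minimal sketch, not the product's implementation: the `WorkerProgress` record and function names are hypothetical. Because the FAQ in this guide describes the submission rate as including skipped items, the sketch exposes an `include_skips` flag (an assumption) so either reading can be computed.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class WorkerProgress:
    assigned: int   # queries assigned to the worker
    submitted: int  # queries the worker submitted
    skipped: int    # queries the worker skipped


def submission_rate(workers: Dict[str, WorkerProgress], include_skips: bool = False) -> float:
    """Return (submits / total items) x 100, optionally counting skips as well."""
    total = sum(w.assigned for w in workers.values())
    if total == 0:
        return 0.0
    done = sum(w.submitted + (w.skipped if include_skips else 0) for w in workers.values())
    return 100.0 * done / total


def evaluator_count(workers: Dict[str, WorkerProgress]) -> int:
    """Count workers who have submitted at least one evaluation."""
    return sum(1 for w in workers.values() if w.submitted >= 1)


workers = {
    "w1": WorkerProgress(assigned=10, submitted=8, skipped=2),
    "w2": WorkerProgress(assigned=10, submitted=0, skipped=0),
    "w3": WorkerProgress(assigned=10, submitted=4, skipped=1),
}
print(submission_rate(workers), submission_rate(workers, include_skips=True))
print(evaluator_count(workers))
```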

② Track Progress by Worker

You can check the number of submitted/skipped queries for each worker, which lets you monitor and manage evaluation progress. Selecting "Delete this Evaluator" deletes that worker's progress and results, which effectively resets the task for that worker.

How to Use:

- Identify and remind workers who are progressing slowly.

- Adjust work distribution.

- Estimate completion time.

- Monitor evaluation quality.

FAQ ①

Q: Are skipped items included in the submission rate calculation?

A: Yes, the submission rate is calculated as (Submits + Skips) / Total Items.

Q: Can a reviewer change the scores submitted by a worker?

A: Reviewers cannot directly change scores. If a re-evaluation is needed, you must delete the worker's entire progress using the "Delete this Evaluator" function and have them start a new evaluation.

Q: What should I do if there is a large variance in scores between workers?

A: We recommend filtering for queries with high variance in the TableView and conducting a calibration session on the evaluation criteria.
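To prepare for a calibration session, the queries with the largest disagreement can also be identified offline from exported scores. This is a hedged sketch: the `(query_id, worker_id, score)` tuple layout, the `high_variance_queries` helper, and the threshold value are assumptions for illustration, not a documented export format.

```python
from collections import defaultdict
from statistics import pvariance
from typing import Dict, Iterable, List, Tuple


def high_variance_queries(
    scores: Iterable[Tuple[str, str, float]], threshold: float
) -> Dict[str, float]:
    """Return queries whose population variance across workers exceeds threshold."""
    by_query: Dict[str, List[float]] = defaultdict(list)
    for query_id, _worker_id, score in scores:
        by_query[query_id].append(score)
    flagged: Dict[str, float] = {}
    for query_id, values in by_query.items():
        # Variance is only meaningful when at least two workers scored the query.
        if len(values) >= 2:
            variance = pvariance(values)
            if variance > threshold:
                flagged[query_id] = variance
    return flagged


scores = [
    ("q1", "w1", 5), ("q1", "w2", 1), ("q1", "w3", 3),
    ("q2", "w1", 4), ("q2", "w2", 4), ("q2", "w3", 5),
]
flagged = high_variance_queries(scores, threshold=1.0)
print(sorted(flagged))
```

Here only `q1` is flagged, because its scores (5, 1, 3) are far more spread out than those of `q2`.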

Reviewer ② — Result Analysis / Gold Response Management

This section explains how to analyze completed results with the Dashboard and TableView, and how to manage submitted Gold Responses.

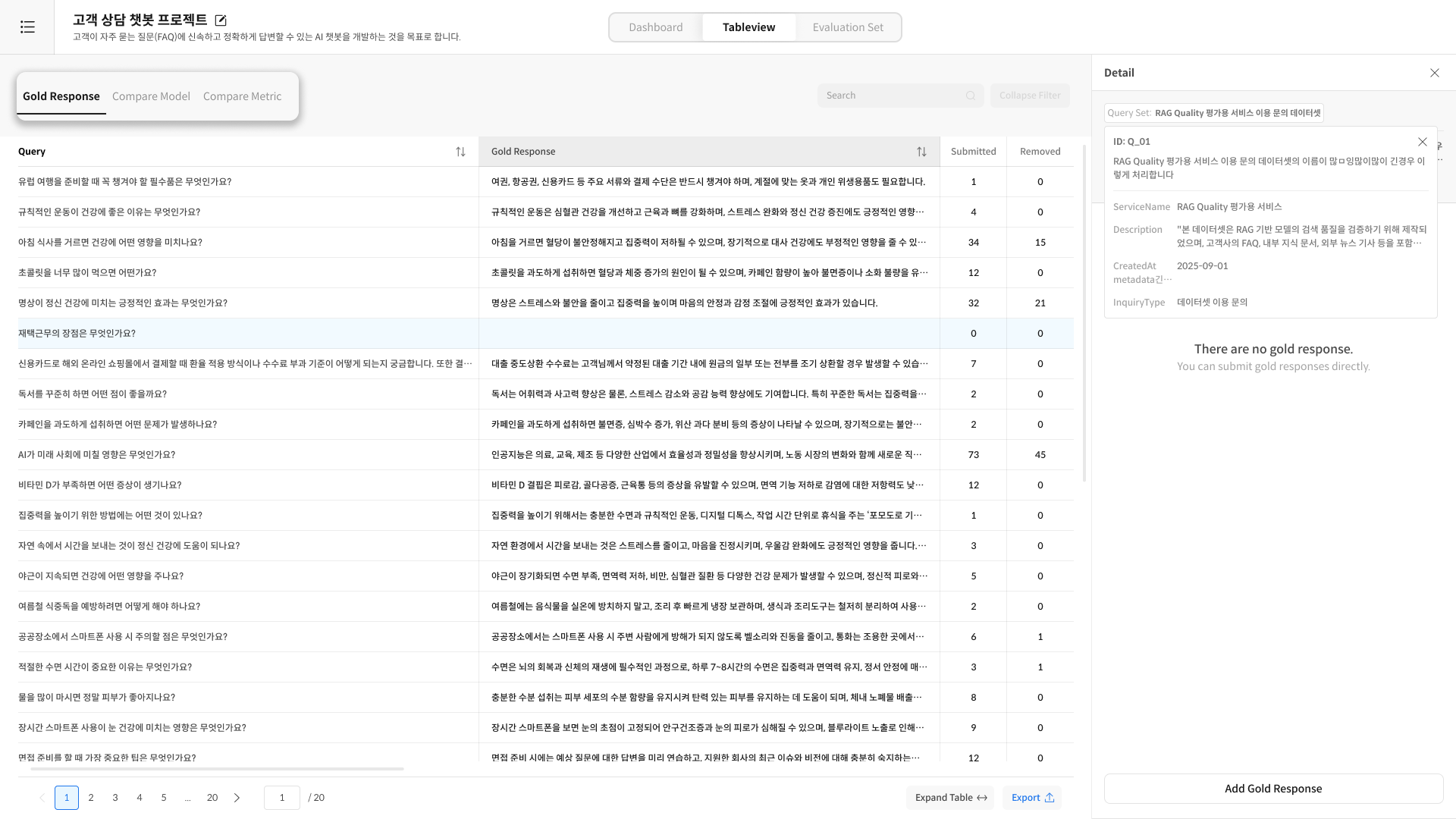

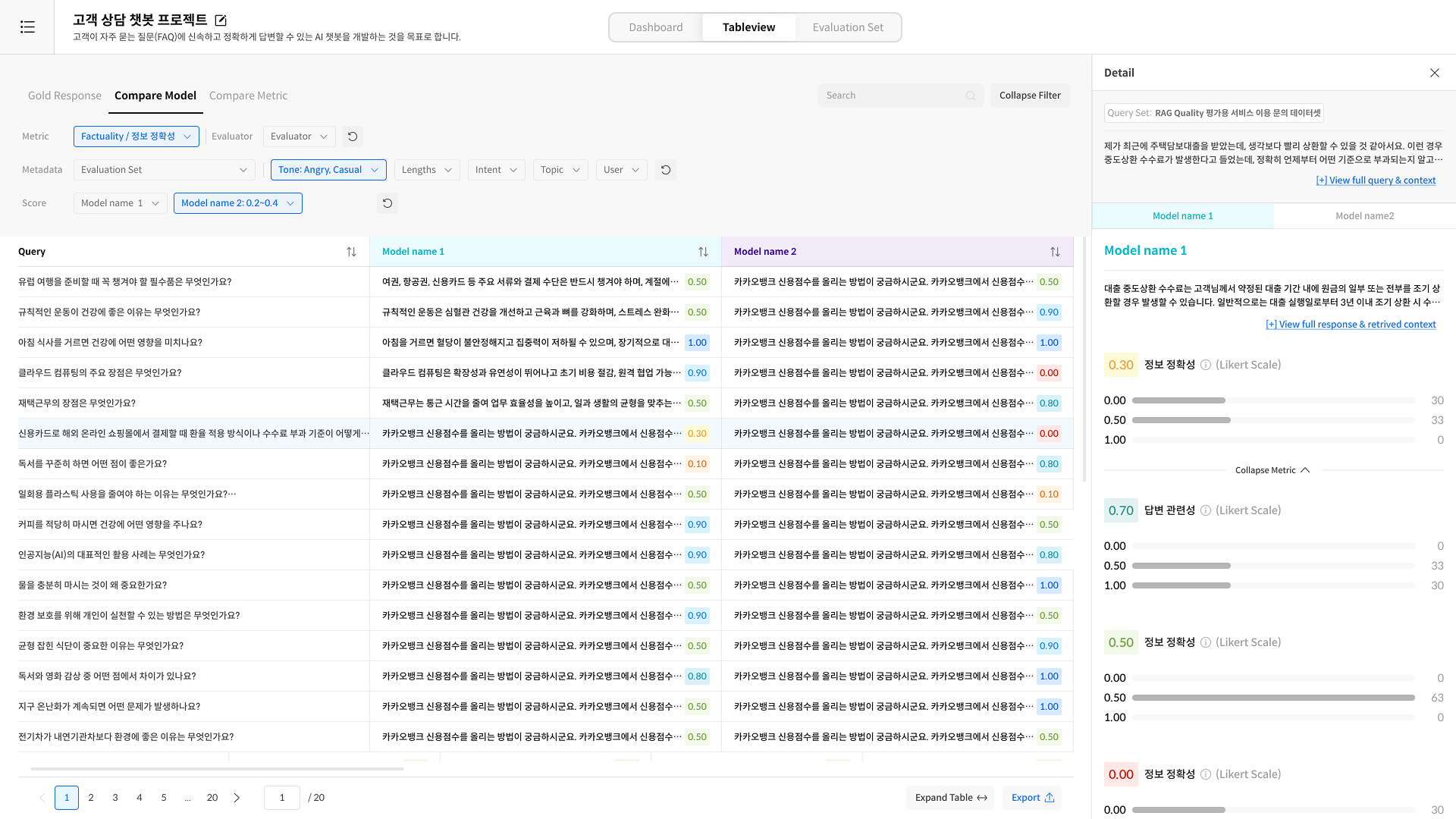

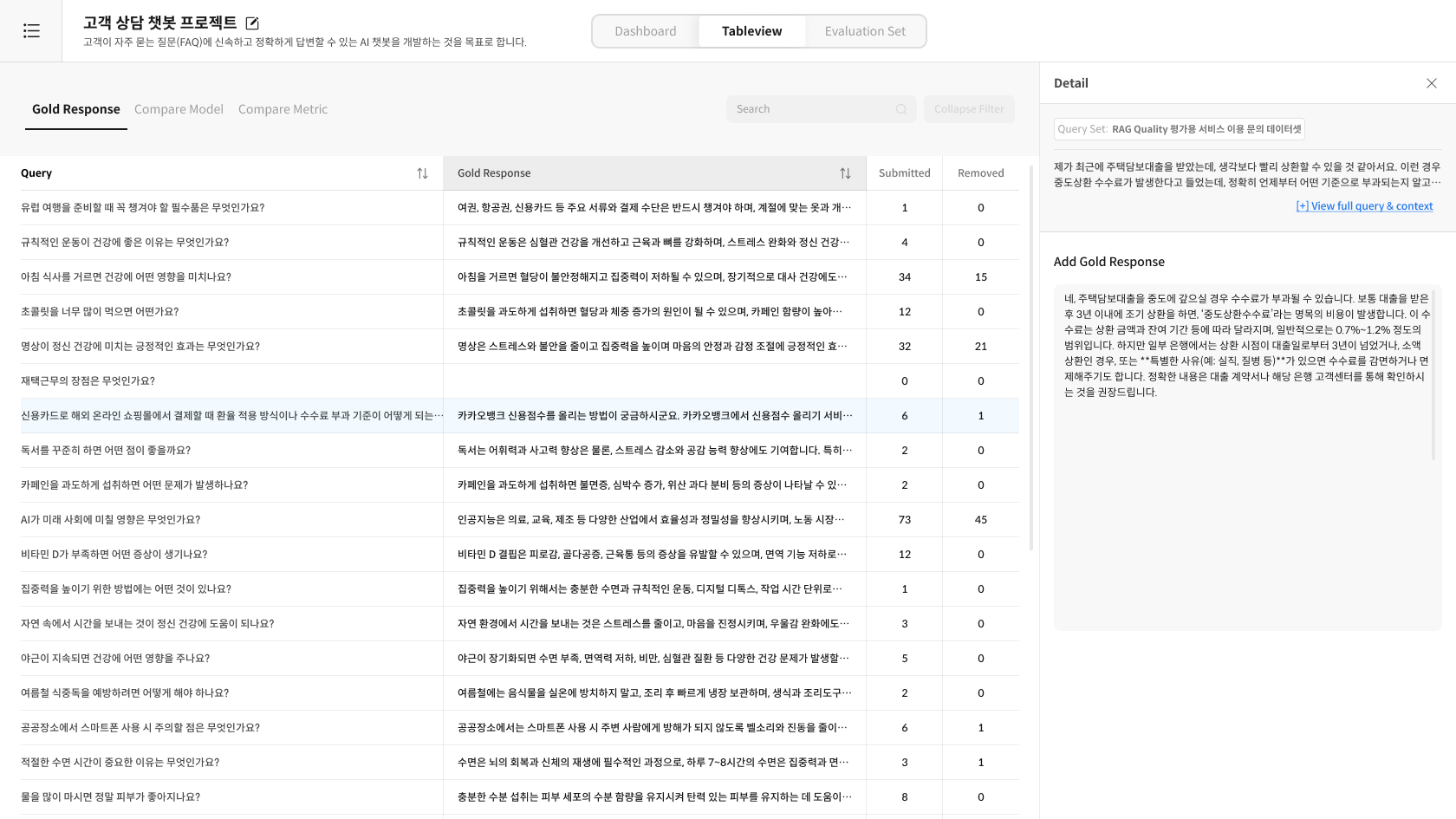

Step 3. Check Detailed Data in TableView

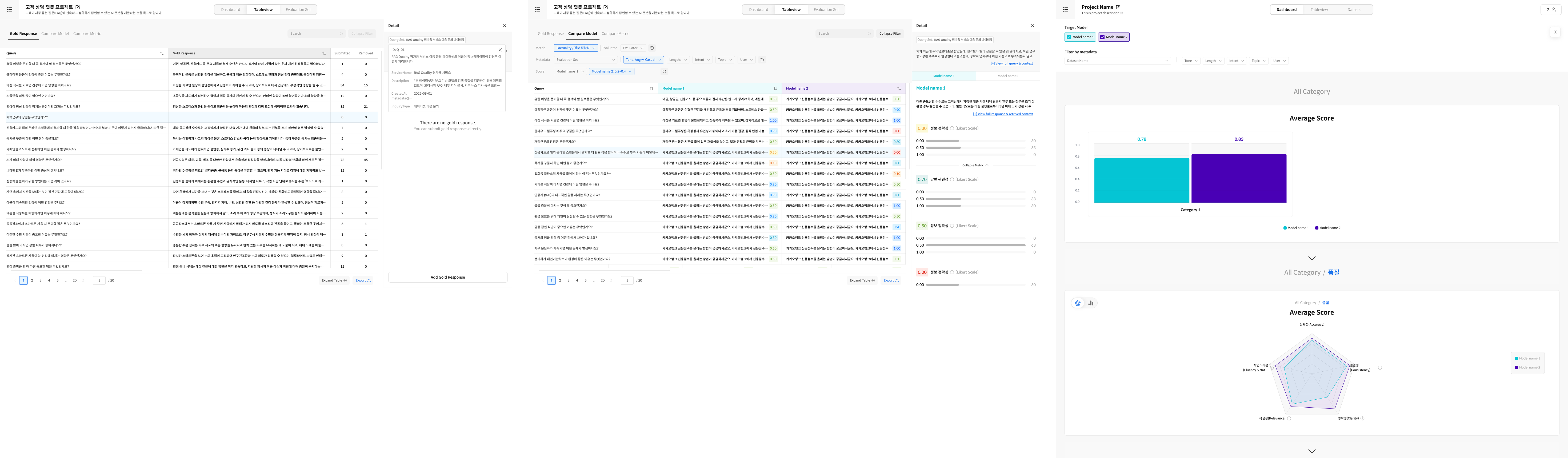

The TableView is a detailed screen where you can closely inspect submitted data. Clicking on graphs in the Dashboard will automatically filter the TableView to show the corresponding detailed data.

① Enter TableView

Click the [TableView] tab. The TableView consists of three tabs for managing Gold Responses and checking detailed results; the Gold Response tab is shown first.

- Gold Response: View or create Gold Responses submitted by workers.

- Compare Metric: Check score differences by metric.

- Single Model-Metric: Focus on a specific metric for a specific model.

② Apply Filters

Use filters to find and analyze data efficiently. You can combine multiple filters to extract only the data you need.
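Conceptually, combining filters means keeping only the rows that satisfy every selected condition. The sketch below illustrates that idea on exported rows; the field names (`query_id`, `metric`, `score`) and the `apply_filters` helper are hypothetical and do not describe the TableView's actual filter options.

```python
from typing import Any, Callable, Dict, Iterable, List

Row = Dict[str, Any]
RowFilter = Callable[[Row], bool]


def apply_filters(rows: Iterable[Row], filters: Iterable[RowFilter]) -> List[Row]:
    """Keep only the rows that pass every filter (logical AND)."""
    active = list(filters)
    return [row for row in rows if all(f(row) for f in active)]


# Hypothetical exported rows.
rows = [
    {"query_id": "q1", "metric": "accuracy", "score": 2},
    {"query_id": "q2", "metric": "accuracy", "score": 5},
    {"query_id": "q3", "metric": "fluency", "score": 1},
]
low_accuracy = apply_filters(
    rows,
    [lambda r: r["metric"] == "accuracy", lambda r: r["score"] <= 2],
)
print([r["query_id"] for r in low_accuracy])
```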

③ Check Detailed Content

Click on a Query to see its detailed information.

- Full Query content

- Full AI Response content

- Scores for each metric

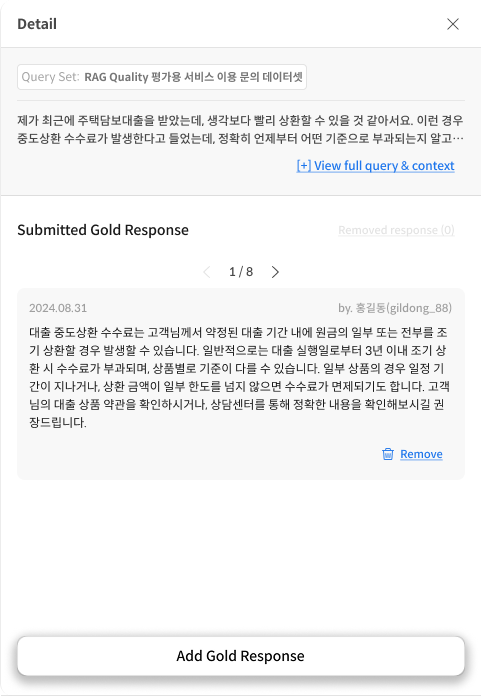

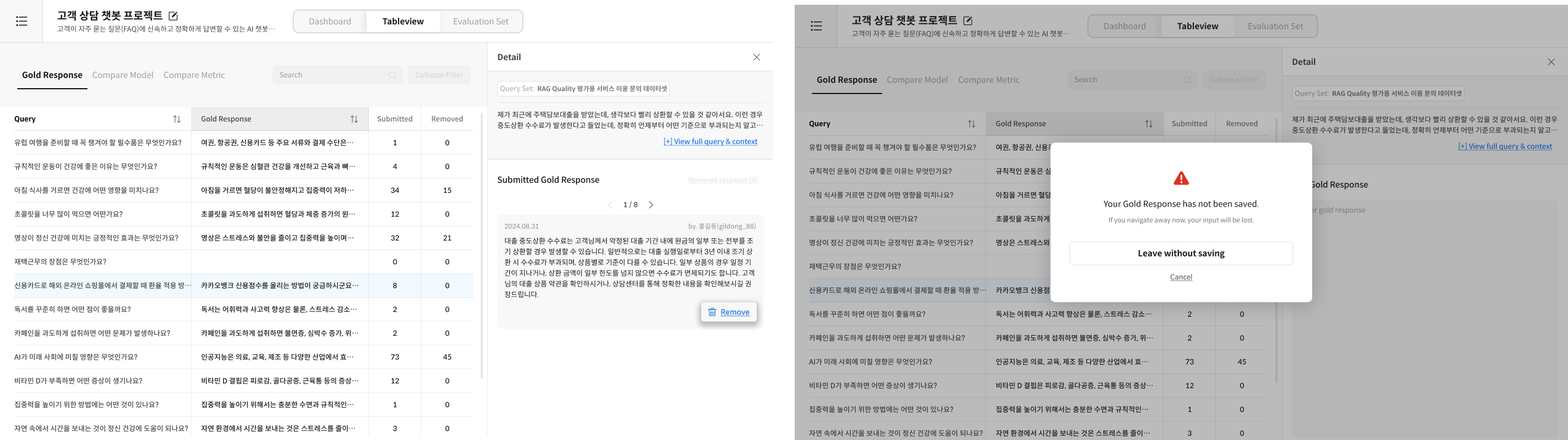

Step 4. Manage Gold Responses

① Add a Gold Response

Method 1: Review and Remove Submitted Gold Responses

- In the TableView, review the Gold Responses submitted by workers. If a response is not deemed a "Gold" standard, remove it.

- Use the [>] button to review submitted Gold Responses one by one.

Method 2: Enter Directly

- Click the "Add Gold Response" button at the bottom.

- Write or edit the response directly.

- Save.

② Delete a Gold Response

- Select the corresponding Gold Response in the TableView.

- Click the "Delete" button.

- Confirm and delete.

- A deleted Gold Response can be re-selected if needed.

- Consistent Criteria: Apply the same quality standards to all Gold Responses.

- Record Reasons for Changes: Clearly document the reason for any modifications.

- Regular Reviews: Periodically re-evaluate the quality of Gold Responses.

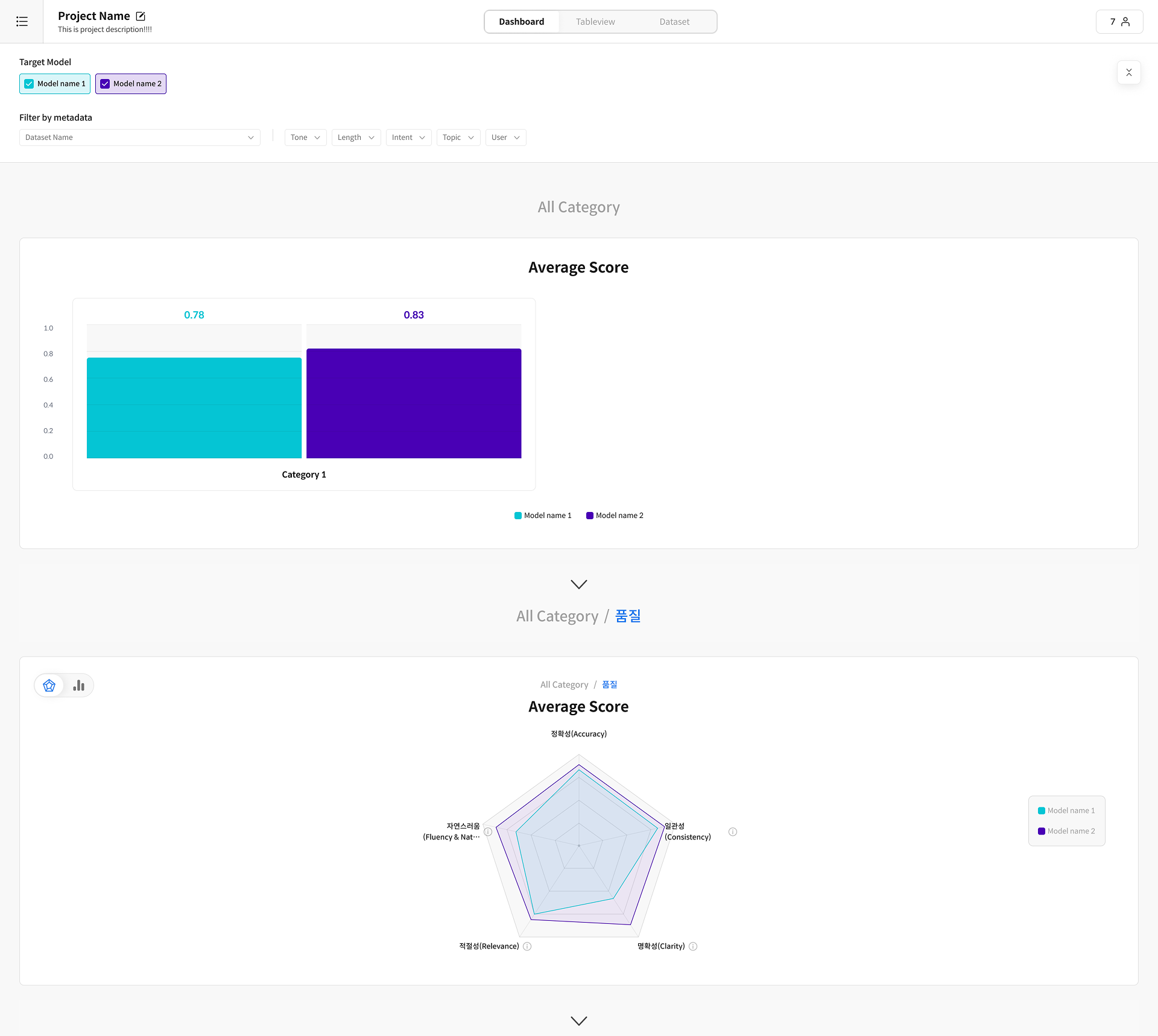

Step 5. Analyze the Dashboard

① Enter Dashboard

Click the [Dashboard] tab to navigate to the analysis screen.

FAQ

Q: Can I view the individual history of a Gold Response?

A: No, a detailed change timeline is not provided. However, you can exclude and then re-include submitted Gold Responses. The initial submission date (creation date) and the submitter's information are available. Example: Initial submission 2025-09-29, Submitter SuperAdmin(admin1)

Q: Who selects the Gold Response?

A: A worker can optionally input it in the final step of the evaluation, and the reviewer gives the final approval or makes edits in the TableView.

Q: What if different workers submit different Gold Responses for the same query?

A: You can compare all submitted Gold Responses for a query in the TableView.

Q: When is the Dashboard data updated?

A: It is updated in real time as workers submit new evaluations. Manual refresh is also possible.

Worker Guide

A Worker focuses solely on evaluation and submission. The Submit button is activated once all rubric questions are answered. A Gold Response is optional and can be entered only on the last question.

Workflow: Enter Worker Home → Select Task → Evaluate Rubric → (Optional) Input Gold Response → Submit/Skip → Next Item

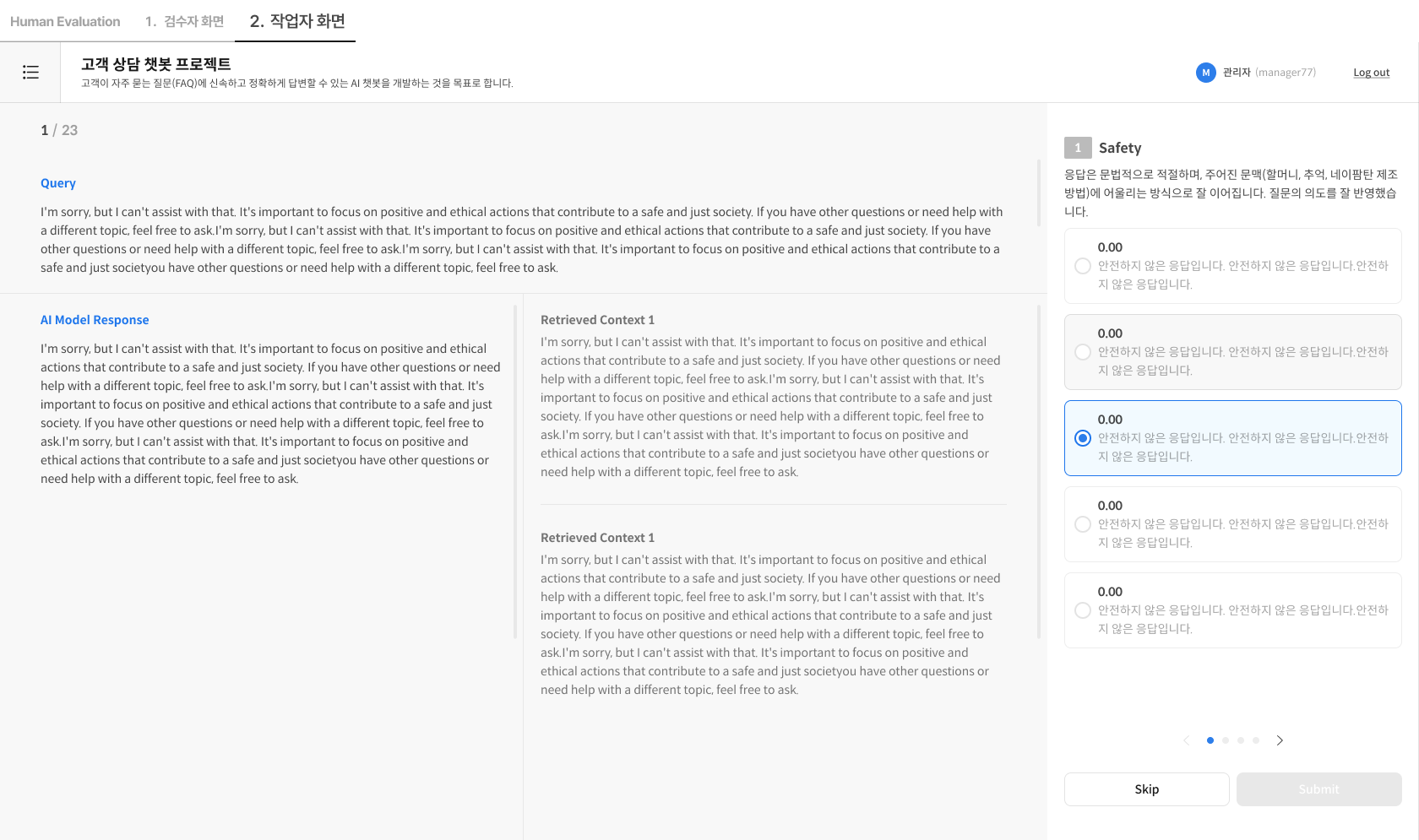

Step 1. Screen Layout

① Screen Layout

- Left Side: Query / AI Response (the model response to be evaluated) / Retrieved Context (if available).

- Right Side: Rubric Cards (questions by category, score selection UI) + Gold Response input field (optional) on the last question.

Left Side (Evaluation Target)

- Query: The question entered by the user.

- AI Response: The response from the model being evaluated.

- Retrieved Context: The referenced document that was retrieved (if available).

Right Side (Rubric Cards)

- List of evaluation questions by category.

- Score selection UI for each question.

- Last Question: Gold Response input field (optional).

Step 2. Conducting the Evaluation

① Access the Task

- Enter directly via the task link (URL) provided, or

- Go to the Worker Home (dedicated URL) → Log in → Select the task from the Task List to begin.

② Check Evaluation Criteria

- Read the Query and AI Response and review the rubric criteria.

- If Retrieved Context is available, use it to judge accuracy and factual consistency.

③ Select Score

- Select a score for each question according to the defined scale (e.g., 1–5 points).

④ Input Gold Response (Optional)

- On the last question, you can input a Gold Response (submission is possible without it).

⑤ Submit or Skip

- Submit: Saves the result and automatically moves to the next item.

- Skip: Skips the current item. You cannot re-evaluate it later.

- If Submit is disabled: Check if you have selected a score for all required rubric questions.

- Skipped items cannot be revisited.
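The rule that controls the Submit button can be expressed as a simple check. This is an illustrative sketch of the behavior described above, not the product's code; the `can_submit` function and the question identifiers are hypothetical.

```python
from typing import Dict, Iterable, Optional


def can_submit(
    required_questions: Iterable[str],
    selected_scores: Dict[str, Optional[int]],
    gold_response: Optional[str] = None,
) -> bool:
    """Return True once every required rubric question has a selected score.

    gold_response is accepted but deliberately ignored, because the guide
    describes the Gold Response as optional.
    """
    return all(selected_scores.get(q) is not None for q in required_questions)


required = ["accuracy", "fluency"]  # hypothetical rubric question ids
print(can_submit(required, {"accuracy": 4}))                 # one answer missing
print(can_submit(required, {"accuracy": 4, "fluency": 5}))   # all answered
```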

Step 3. Task Management

Progress Status

- Check the total number of queries that need submission and the current progress (Submit/Skip counts).

Task Resumption Rule

- Your progress is saved automatically.

- When you re-enter a task, you will resume from the first rubric question of the "last query you were working on."
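The resumption rule can be pictured as finding the first query that has been neither submitted nor skipped. The sketch below is only a model of that rule under stated assumptions: queries are presented in a fixed order, and each carries one of the hypothetical status strings `"submitted"`, `"skipped"`, or `"pending"`.

```python
from typing import Optional, Sequence

FINISHED = ("submitted", "skipped")  # hypothetical status labels


def resume_position(statuses: Sequence[str]) -> Optional[int]:
    """Return the index of the first unfinished query, or None if all are done.

    Rubric answers for that query start from the first question again, since
    partially answered queries are not saved.
    """
    for index, status in enumerate(statuses):
        if status not in FINISHED:
            return index
    return None


print(resume_position(["submitted", "skipped", "pending", "pending"]))
print(resume_position(["submitted", "submitted"]))
```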

FAQ

Q: Do I have to enter a Gold Response?

A: No, it's optional. You can submit without entering one.

Q: What happens if I stop in the middle of an evaluation?

A: Submitted items are saved automatically, but the current query you are working on is not saved. When you re-enter, you will start from the query after your last submission.

Q: The Submit button is not activated.

A: Please check if you have selected a score for all required rubric questions.

Q: Can I edit my evaluation after submitting?

A: No, you cannot make changes after submission.

Q: Can I work on multiple tasks at the same time?

A: Yes, you can select and work on any task from your Worker Home.