BEIR Leaderboard

BEIR (Benchmark for Information Retrieval) evaluation is a standardized benchmark that measures retrieval quality (Precision, Recall, etc.) alongside Judge evaluation.

In a RAGAs Task, when you select a Dataset that meets specific column conditions, the system offers the option to run the standardized BEIR benchmark evaluation alongside Judge evaluation. After evaluation completes, the results appear in the BEIR Leaderboard at the top of the Dashboard.

Step 1. Conditions for BEIR Evaluation

For the BEIR evaluation option to be offered, the Dataset must include the following special columns. ※ If a row references multiple chunks or documents, append a number (1, 2, ..., n) to the Excel column name so that each chunk has its own column (e.g., gold_chunk_1, gold_chunk_2, ...).

- Query Required Columns: Must include one of the following column names:
  `gold_chunk_n`, `gold_chunkn`, `gold_context_n`, `gold_contextn`

- Response Set Required Columns: Must include one of the following column names:
  `retrieved_chunk_n`, `retrieved_chunkn`, `retrieved_context_n`, `retrieved_contextn`
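As a rough illustration of the naming rules above, the following Python sketch checks whether a Query's and a Response Set's column names qualify. It assumes exact, case-sensitive matching of the listed patterns with a numeric suffix; the helper names (`numbered_columns`, `beir_eligible`) are hypothetical and not part of the platform, whose own validation may differ.

```python
import re

# Hypothetical patterns mirroring the listed column names; "_?" accepts both
# gold_chunk_1 and gold_chunk1. Matching here is case-sensitive (an assumption).
GOLD_PATTERN = re.compile(r"^gold_(chunk|context)_?(\d+)$")
RETRIEVED_PATTERN = re.compile(r"^retrieved_(chunk|context)_?(\d+)$")


def numbered_columns(columns, pattern):
    """Return the column names matching pattern, ordered by numeric suffix."""
    found = []
    for name in columns:
        match = pattern.match(name)
        if match:
            found.append((int(match.group(2)), name))
    return [name for _, name in sorted(found)]


def beir_eligible(query_columns, response_columns):
    """True if the Query has a gold column and the Response Set a retrieved column."""
    return bool(numbered_columns(query_columns, GOLD_PATTERN)) and bool(
        numbered_columns(response_columns, RETRIEVED_PATTERN)
    )


print(beir_eligible(["question", "gold_chunk_1", "gold_chunk_2"],
                    ["answer", "retrieved_chunk1", "retrieved_chunk2"]))  # True
```

Sorting by the numeric suffix (rather than alphabetically) keeps `retrieved_chunk10` after `retrieved_chunk2`, which matters if the column number encodes retrieval rank.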

Step 2. Evaluation Flow

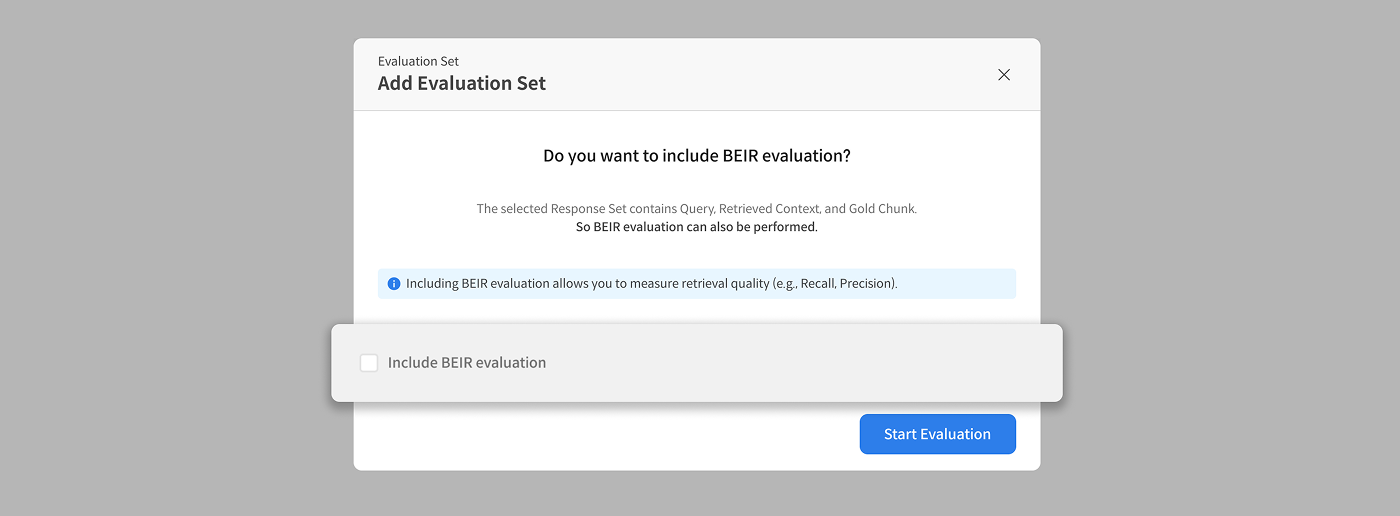

When you select a Dataset that meets the conditions, the system displays a modal in which you choose whether to include BEIR evaluation.

① Check the "Include BEIR evaluation" checkbox to run BEIR evaluation alongside Judge evaluation.

Modal screen for selecting whether to include BEIR evaluation

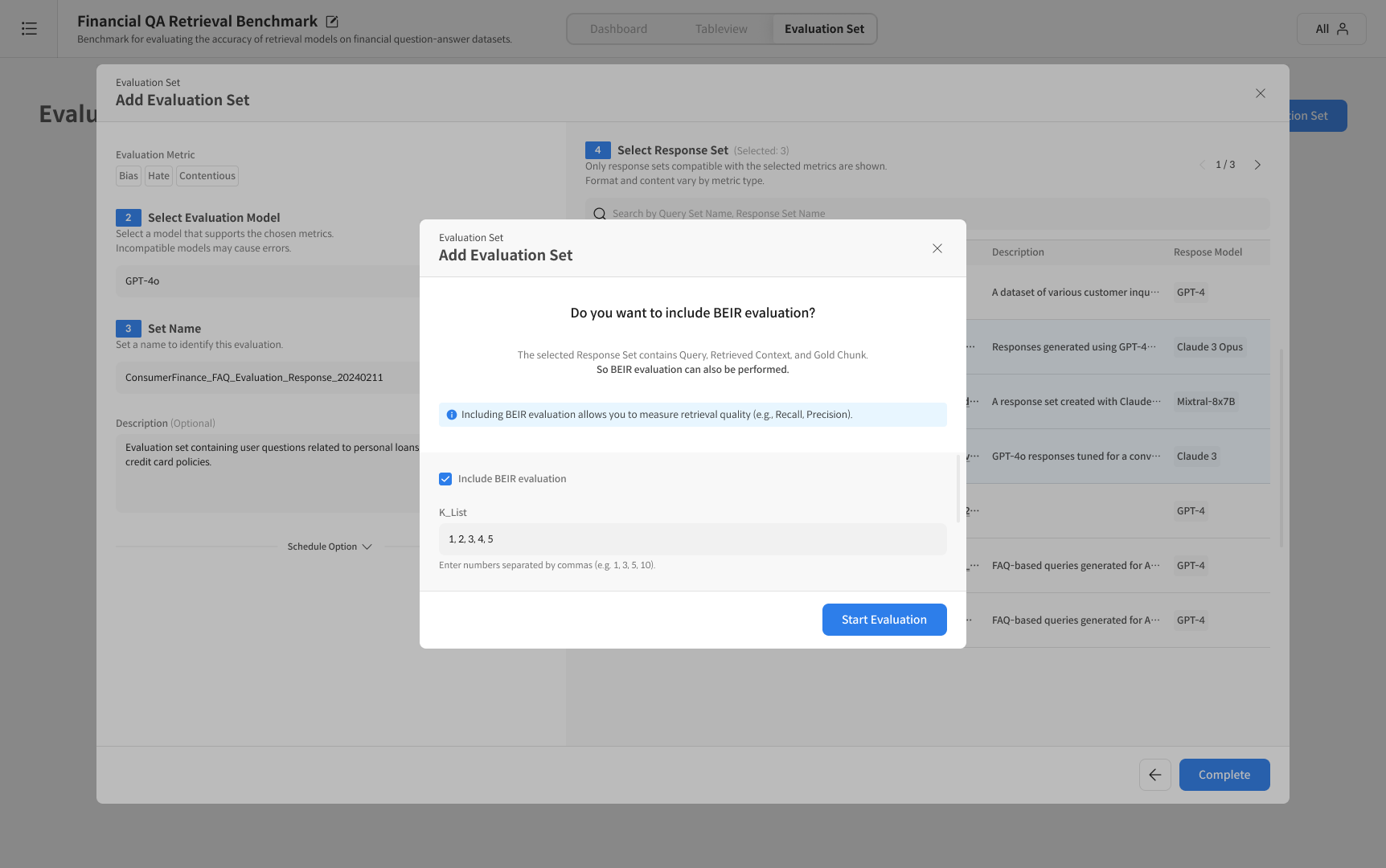

② When checked, the K-List input field becomes active.

- Example: 1, 2, 3, 5, 10 (enter values separated by commas)
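The K-List is a comma-separated list of cutoffs. A minimal parsing sketch follows, assuming K values must be positive integers and that duplicates are dropped; `parse_k_list` is a hypothetical helper, and the platform's actual validation rules are not documented here.

```python
def parse_k_list(text):
    """Parse a comma-separated K-List such as "1, 2, 3, 5, 10" into sorted cutoffs."""
    cutoffs = set()  # a set drops duplicates such as "5, 5"
    for part in text.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate stray commas, e.g. "1, 5,"
        k = int(part)  # raises ValueError for non-numeric input such as "five"
        if k <= 0:
            raise ValueError(f"K must be a positive integer, got {k}")
        cutoffs.add(k)
    return sorted(cutoffs)


print(parse_k_list("1, 2, 3, 5, 10"))  # [1, 2, 3, 5, 10]
```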

③ Click "Start Evaluation" to run Judge evaluation and BEIR evaluation together.

- If the checkbox is not checked, only Judge evaluation is performed.

K-List configuration screen

BEIR evaluation measures retrieval quality (Precision, Recall, etc.) alongside Judge evaluation.

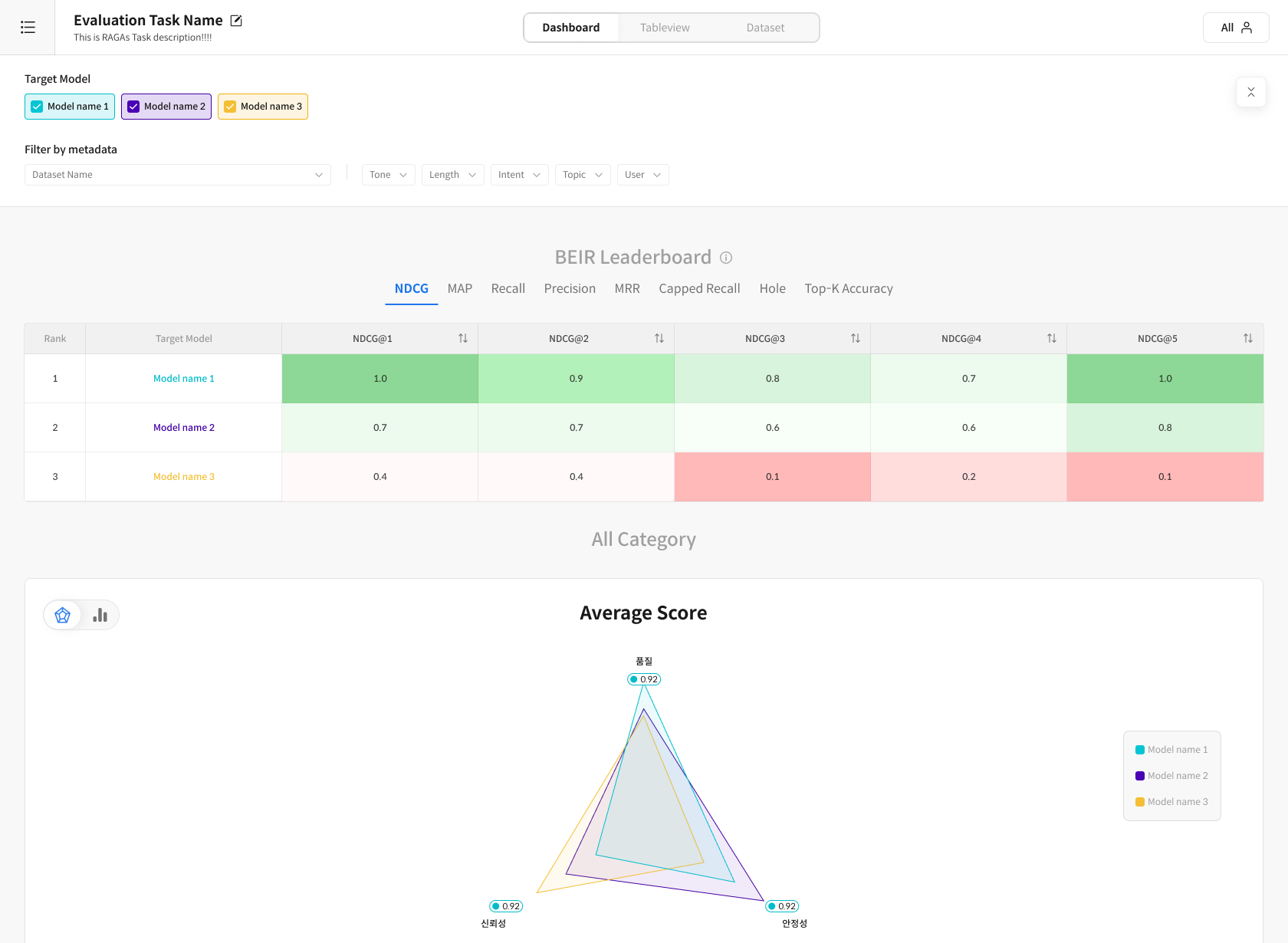

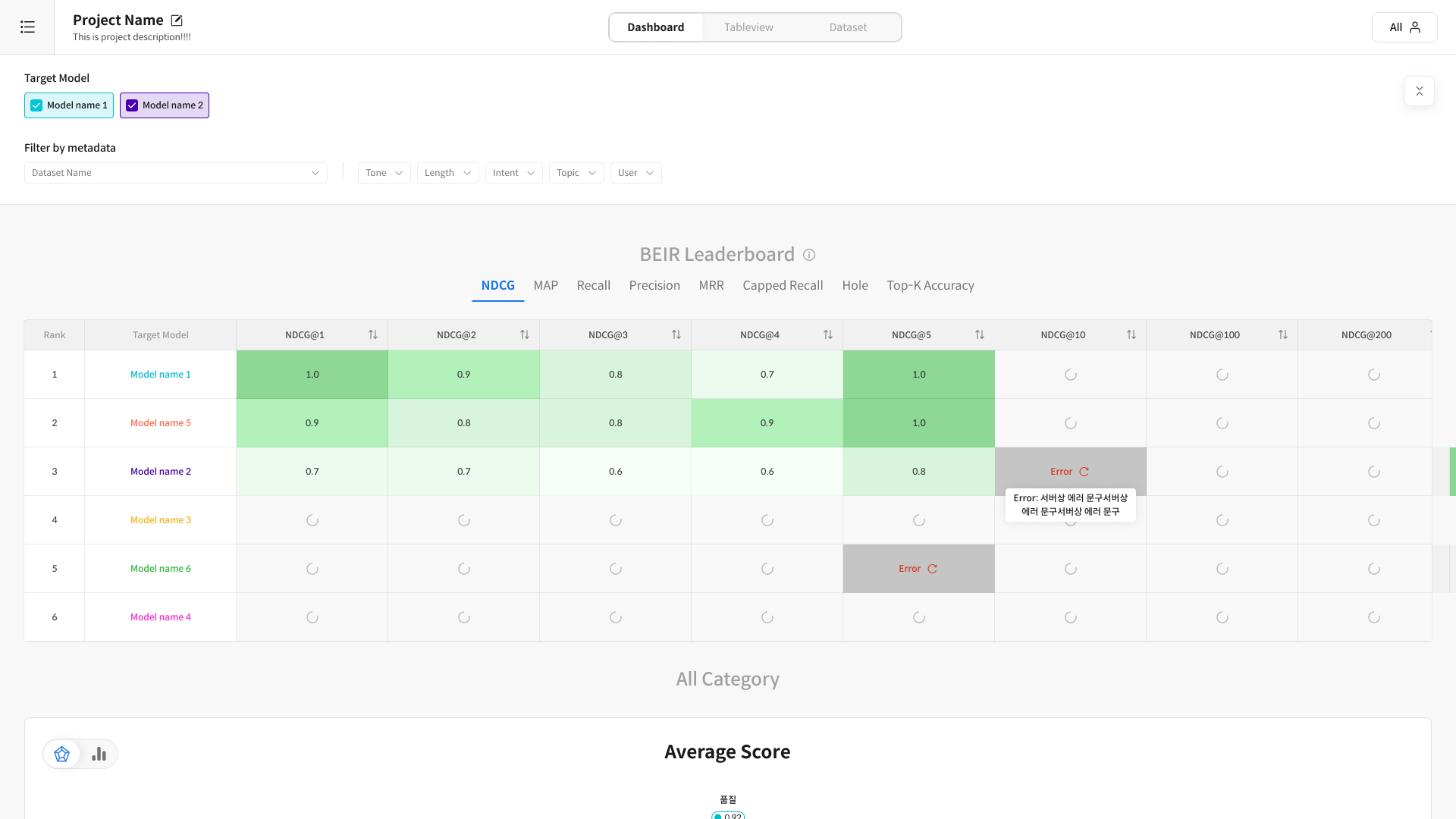

④ Check Results: After evaluation completes, the BEIR Leaderboard appears at the top of the dashboard, showing key metrics such as Precision, Recall, and F1 for each K value in the K-List.

BEIR Leaderboard screen example
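To make the Leaderboard metrics concrete, here is a sketch of Precision@K, Recall@K, and F1@K using their standard definitions: for each row, the top K retrieved chunks (in retrieved_chunk_n order) are compared with the gold chunks. It assumes chunks are matched by exact text equality, Precision@K divides by K, and per-row scores are macro-averaged; the platform's exact matching and averaging rules may differ, and the function names are hypothetical.

```python
def metrics_at_k(gold, retrieved, k):
    """Precision@k, Recall@k and F1@k for one row (standard definitions).

    gold:      relevant chunk texts, e.g. values of the gold_chunk_n columns
    retrieved: chunk texts in rank order, e.g. retrieved_chunk_1, retrieved_chunk_2, ...
    """
    gold_set = set(gold)
    top_k = set(retrieved[:k])  # a chunk retrieved twice counts only once
    hits = len(top_k & gold_set)
    precision = hits / k
    recall = hits / len(gold_set) if gold_set else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def leaderboard_row(rows, k_list):
    """Macro-average (precision, recall, f1) over all rows for each K."""
    if not rows:
        raise ValueError("at least one row is required")
    result = {}
    for k in k_list:
        scores = [metrics_at_k(gold, retrieved, k) for gold, retrieved in rows]
        result[k] = tuple(sum(s[i] for s in scores) / len(scores) for i in range(3))
    return result


# Two hypothetical rows: (gold chunks, retrieved chunks in rank order)
rows = [(["A", "B"], ["A", "C", "B"]), (["D"], ["E", "D", "F"])]
for k, (p, r, f1) in leaderboard_row(rows, [1, 2, 3]).items():
    print(f"K={k}: Precision={p:.3f} Recall={r:.3f} F1={f1:.3f}")
```

Here F1 is computed per row and then averaged; computing F1 from the averaged Precision and Recall instead would give slightly different numbers, so compare against the Leaderboard with that in mind.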

❗Notes

- BEIR evaluation does not have a "Pause" feature.

- BEIR evaluation runs per Response Set. If multiple evaluations use the same Response Set, only one BEIR result is displayed in the evaluation list.

- BEIR results can be viewed in the Dashboard alongside the Judge evaluation results.